Mastering Continuous Improvement Process Steps

- shems sheikh

- Nov 20, 2025

- 14 min read

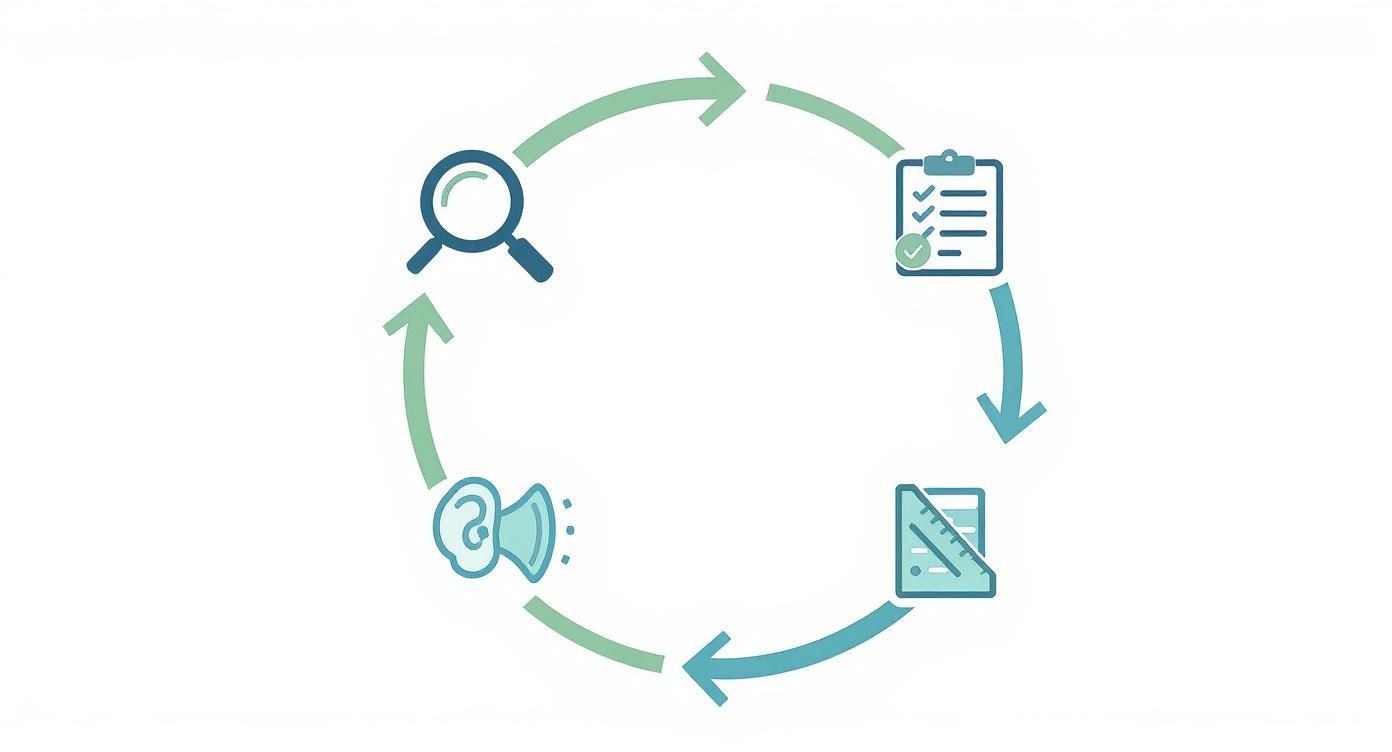

At its heart, the continuous improvement process is a simple loop: you spot an opportunity, get some feedback on it, decide what to tackle first, and then measure whether your changes actually worked. This isn't some massive, one-off project. It’s a system you run over and over again, letting small wins stack up into major gains.

Why Continuous Improvement Is a Survival Skill

In a world where user expectations are always climbing, standing still is the same as falling behind. Let's be honest: continuous improvement isn't just a "nice-to-have" philosophy anymore; it's a core business function that separates the teams that are thriving from the ones just trying to keep their heads above water. It’s the engine that powers everything from product updates and user experience tweaks to making your own team more efficient.

This whole approach is about turning vague goals like "make the website better" into a concrete, repeatable system. For any product or web team, it's the roadmap for converting abstract ideas into results you can actually see and measure.

The Tangible Benefits of a Structured Process

Putting a formal improvement cycle in place isn't just about feeling more organized—it delivers real, tangible returns. Companies that get serious about process improvement see productivity jump by an average of 20-30%, and 70% report being more efficient within the first year. These aren't just minor adjustments, either. Some lean process improvements can slash project cycle times by up to 50%.

This is the kind of structured methodology that top-tier companies use to stay ahead of the pack. You can find more insights on how these improvements impact business outcomes in this post about what top companies possess.

The goal is to create a dynamic loop, not a linear checklist. Each improvement, no matter how small, feeds into the next, creating momentum that builds on itself.

Put together, the core steps form a circle: define your goals, gather feedback, prioritize, then implement and measure. The "measure" phase feeds directly back into identifying what to tackle next, keeping the engine of growth running.

Making Improvement a Habit, Not a Project

The most successful teams I've seen don't treat this like a task. They bake it into their culture. It becomes a shared mindset where everyone, from developers to designers, feels empowered to spot friction and suggest a better way.

This is where modern tools like Beep really change the game, especially for the feedback and iteration stages. By letting your team (and clients!) drop visual, contextual feedback right on a webpage, you cut out so much of the back-and-forth. The whole process of finding, discussing, and fixing issues becomes faster and a whole lot less painful.

Right, let's get into it. Before you even think about improving a single pixel, you have to know what "better" actually means. It’s the first—and honestly, the most critical—step in any continuous improvement process. If you skip this, your team is basically flying blind, making changes based on gut feelings and opinions instead of a unified target.

You've probably heard goals like "improve the user experience" or "make the checkout faster." They sound good, but they're useless for driving real action. Vague goals just create confusion, which leads to wasted time and features that completely miss the mark. A clear goal is your North Star; it makes sure everyone, from engineering to marketing, is pulling in the same direction.

From Vague Ideas to Specific Targets

So how do you turn a fuzzy idea into something your team can actually execute on? Product managers have a few tried-and-true frameworks for this. These aren't just corporate buzzwords; they're tools for bringing clarity and alignment to your work.

SMART Goals: This one’s a classic for a reason. It forces you to make your goals Specific, Measurable, Achievable, Relevant, and Time-bound. So "improve UX" becomes something concrete, like "Reduce support tickets related to user profile navigation by 15% by the end of Q2." See the difference?

Objectives and Key Results (OKRs): OKRs are great for connecting ambitious goals (Objectives) with tangible outcomes (Key Results). Your Objective might be to "Create a frictionless checkout experience," and your Key Results would be things like "Decrease cart abandonment rate from 25% to 15%" and "Achieve a checkout completion time of under 90 seconds."
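A Key Result like the cart-abandonment one above is easiest to talk about when you can say how far along you are. Below is a minimal Python sketch of one way to express that: progress as the fraction of the distance covered from the baseline to the target. The function name and the 20% mid-quarter reading are hypothetical, and the sketch isn't tied to any particular OKR tool.

```python
def key_result_progress(start: float, target: float, current: float) -> float:
    """Fraction of the way from `start` to `target`, clamped to [0, 1].

    Works whether the target is below the start (e.g. cart abandonment rate)
    or above it (e.g. checkout completion rate).
    """
    if target == start:
        raise ValueError("target must differ from start")
    progress = (current - start) / (target - start)
    return max(0.0, min(1.0, progress))


# Hypothetical mid-quarter reading for "Decrease cart abandonment rate from 25% to 15%".
print(key_result_progress(start=25.0, target=15.0, current=20.0))
```

Because it divides by `target - start`, the same helper handles "lower is better" and "higher is better" metrics without any special casing.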

Using structures like these forces you to put a number on what victory looks like. It’s not about micromanaging; it’s about giving everyone a clear finish line they can see and sprint toward together.

A well-defined goal is more than a metric; it’s a communication tool. It tells everyone on the team, 'This is what we are building, this is why it matters, and this is how we will know we’ve succeeded.'

Crafting a Shared Understanding of Success

Once you’ve got your target locked in, the last piece of the puzzle is getting universal buy-in. A product manager might be the one to define the goal, but it takes the entire team to actually hit it. That means the goal needs to be visible, understood, and agreed upon by everyone involved.

Let's say a team sets a goal to "Increase mobile user engagement." Still a bit too broad. A much better, more specific goal would be: "Increase the daily active usage of the mobile app's core feature by 20% over the next three months."

Suddenly, that specific target gives the whole team focus:

Designers can start asking: "What UI changes will make this feature more intuitive on mobile?"

Developers can think about: "How can we boost the performance and load time of this feature to make it snappier?"

Marketers can plan: "What in-app campaigns can we run to remind users about this feature?"

This kind of clarity turns a line item on a roadmap into a shared mission. It gets everyone fired up and sets the stage for everything that comes next.

Gathering Actionable Feedback, Not Just Noise

Alright, you've got your goals locked in. What's next? It's time to figure out where the real opportunities are hiding. And I don't mean collecting a mountain of random opinions; I'm talking about systematically gathering high-quality, actionable insights that point you straight toward your target. Think of good feedback as the fuel for your improvement engine.

So many teams fall into the trap of only looking at one type of data. They'll obsess over analytics dashboards but completely ignore the human story behind the numbers. Or they’ll listen to a couple of really vocal customers without checking if their experience is the norm. The secret is to blend both quantitative and qualitative feedback to get the full picture.

Blending Data with Human Stories

To really get what's happening with your users, you need to understand both the "what" and the "why." This means pairing hard data with human context so you can make decisions that actually hit the mark.

Quantitative Data (The What): This is the hard evidence you pull from tools like Google Analytics or your own internal metrics. It tells you what users are doing. For instance, you might see that a whopping 70% of users bail on a specific page in your signup flow. That’s a clear "what."

Qualitative Data (The Why): This is the context you get from user interviews, surveys, and visual feedback. It tells you why they're doing it. That 70% drop-off? It might be because of a confusing form field or a button that’s completely broken on mobile.

Without the "why," you’re just guessing at a solution. Combining these two is non-negotiable if you want to solve the right problems.
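The quantitative "what" is often simple arithmetic over funnel counts. Here is a minimal Python sketch, assuming you can export how many users reached each step of the signup flow; the step names and counts are hypothetical, chosen so that one step shows a 70% drop-off.

```python
def step_drop_off(funnel: list[tuple[str, int]]) -> dict[str, float]:
    """Share of users lost between each step and the one after it (0.0 to 1.0)."""
    drop_off = {}
    for (step, users), (next_step, next_users) in zip(funnel, funnel[1:]):
        # Guard against an empty step so we never divide by zero.
        drop_off[f"{step} -> {next_step}"] = 1 - next_users / users if users else 0.0
    return drop_off


# Hypothetical signup-flow counts for one week.
signup_funnel = [
    ("Landing page", 1000),
    ("Account details", 600),
    ("Verify email", 180),
]
for transition, rate in step_drop_off(signup_funnel).items():
    print(f"{transition}: {rate:.0%} drop-off")
```

The output tells you where to look; it still takes the qualitative feedback to learn why people leave at that step.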

A classic mistake I see is teams treating all feedback as equal. An off-the-cuff comment from a stakeholder just doesn't carry the same weight as a bug report from ten different users all hitting the same wall. Your job is to find the patterns in the noise.
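Finding those patterns can start as simply as counting how many different people hit each problem, so one voice repeating itself doesn't outrank ten users stuck in the same place. A minimal Python sketch, assuming each piece of feedback has already been tagged with an issue label and a user identifier (the tags and users below are hypothetical):

```python
from collections import defaultdict


def rank_issues(reports: list[dict[str, str]]) -> list[tuple[str, int]]:
    """Rank issue tags by the number of distinct users who reported them."""
    users_by_issue: dict[str, set[str]] = defaultdict(set)
    for report in reports:
        users_by_issue[report["issue"]].add(report["user"])
    ranked = [(issue, len(users)) for issue, users in users_by_issue.items()]
    # Most widely reported first; ties broken alphabetically for stable output.
    ranked.sort(key=lambda pair: (-pair[1], pair[0]))
    return ranked


# Hypothetical feedback: ten users hit the same checkout problem,
# while one stakeholder mentions a font-size preference three times.
reports = [{"user": f"user-{i}", "issue": "checkout-button-mobile"} for i in range(10)]
reports += [{"user": "stakeholder", "issue": "hero-font-size"}] * 3
print(rank_issues(reports))
```

Counting distinct users rather than raw reports is the design choice that keeps repeated comments from one person from inflating an issue's weight.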

Making Feedback Visual and Contextual

One of the biggest drags on any improvement cycle is the soul-crushing back-and-forth it takes to understand a simple bug report. Emails like "the button isn't working" are black holes of productivity. This is where visual feedback tools completely change the game.

Tools like Beep let users click directly on any element on your live website and drop a comment right there. It provides immediate, unambiguous context that a long-winded email could never capture.

Instead of a vague message, your developer instantly sees the exact element, the user's comment, and a screenshot. No more guesswork.

This visual approach cuts right through the confusion. When a user leaves a comment, the tool automatically grabs all the crucial technical details you need:

A screenshot showing precisely what the user was looking at.

Browser and OS details so your devs can replicate the issue.

Screen resolution to understand the user's viewport.
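To see why that captured context matters, it can help to model a report as a small data structure. The Python sketch below is hypothetical throughout: the field names, the example values, and the empty-field check are illustrative assumptions, not Beep's actual data model or API.

```python
from dataclasses import dataclass, fields


@dataclass
class FeedbackReport:
    """Hypothetical shape of a contextual bug report (not any tool's real schema)."""
    comment: str
    page_url: str
    element_selector: str   # which element the user clicked on
    screenshot_path: str
    browser: str
    os: str
    screen_resolution: str  # viewport size, e.g. "390x844"

    def missing_context(self) -> list[str]:
        """Names of empty fields: the details a developer would otherwise have to chase."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def to_task_description(self) -> str:
        """Render the report as plain text for pasting into a task tracker."""
        return "\n".join([
            self.comment,
            f"Page: {self.page_url} (element: {self.element_selector})",
            f"Environment: {self.browser} on {self.os}, viewport {self.screen_resolution}",
            f"Screenshot: {self.screenshot_path}",
        ])


vague = FeedbackReport("the button isn't working", "", "", "", "", "", "")
contextual = FeedbackReport(
    comment="Tapping 'Continue' does nothing",
    page_url="https://example.com/signup",
    element_selector="#continue-button",
    screenshot_path="screenshots/report-1.png",
    browser="Safari 17",
    os="iOS 17",
    screen_resolution="390x844",
)
print(vague.missing_context())
print(contextual.to_task_description())
```

The vague email-style report is missing six of seven fields; the contextual one is missing none, which is the whole difference between a black hole and a task someone can pick up.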

Suddenly, a low-quality bug report transforms into a perfectly documented, actionable task in seconds. It speeds up the entire iteration process like you wouldn't believe. For a deeper dive, check out our guide on how to gather customer feedback that actually drives growth.

By gathering feedback this way, you build a rich library of insights that are already teed up and ready for prioritization.

Prioritizing Improvements With Confidence

So, you've opened the floodgates and the feedback is pouring in. Awesome! But now comes the real challenge: figuring out what to do first. It's easy to get overwhelmed by a mountain of ideas, bug reports, and suggestions, leading to a classic case of analysis paralysis.

The goal here is to shift your team out of a purely reactive mode, where you just fix whatever's broken or respond to whoever's shouting the loudest, and into a proactive strategy. You want to focus your precious time and resources on the changes that will actually make a difference.

This is where a good prioritization framework becomes a game-changer. It gives you an objective way to sift through the noise, letting your team make smart, data-informed decisions instead of just going with their gut.

Choosing Your Prioritization Framework

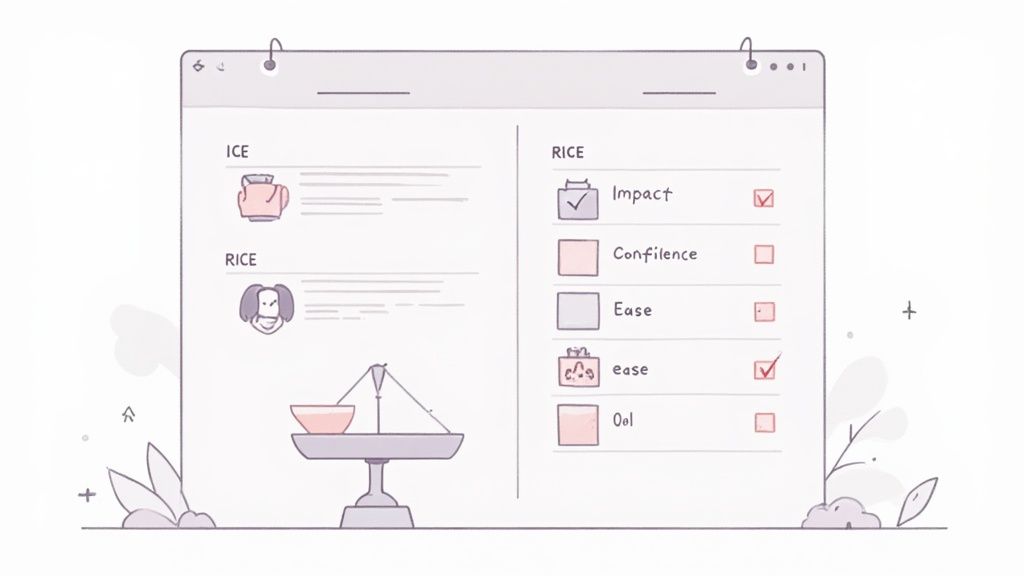

Every team is different, but for most product and web teams, a couple of frameworks stand out for being both effective and easy to get started with: ICE and RICE.

ICE (Impact, Confidence, Ease): This is a fantastic, straightforward starting point. You score each potential improvement (usually on a scale of 1-10) across three simple factors. Impact is how much it will move the needle on your goals. Confidence is how sure you are about that impact. And Ease is how simple it will be to implement.

RICE (Reach, Impact, Confidence, Effort): RICE adds another layer of detail by bringing Reach into the mix—how many users will this change actually touch in a given period? It also swaps "Ease" for Effort, which is a more honest measure of the total time required from everyone on the team.
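To make ICE concrete, here is a minimal Python sketch. How the three ratings are combined varies by team; this sketch multiplies them, though some teams average them instead. The two backlog items and their ratings are hypothetical.

```python
def ice_score(impact: int, confidence: int, ease: int) -> int:
    """ICE score as the product of three 1-10 ratings (one common convention)."""
    for rating in (impact, confidence, ease):
        if not 1 <= rating <= 10:
            raise ValueError("ICE ratings are expected on a 1-10 scale")
    return impact * confidence * ease


# Hypothetical backlog items with team-agreed ratings.
backlog = {
    "Fix broken mobile signup button": ice_score(impact=8, confidence=9, ease=7),
    "Redesign onboarding flow": ice_score(impact=9, confidence=5, ease=3),
}
for idea, score in sorted(backlog.items(), key=lambda item: item[1], reverse=True):
    print(f"{score:4d}  {idea}")
```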

Think of it this way: a team using RICE might compare a small UI tweak to a major feature overhaul. The tweak could have a low Impact but a massive Reach and low Effort, making it a perfect quick win. The huge new feature, on the other hand, might have a monster Impact but lower initial Reach and a ton of Effort, slotting it in as a longer-term strategic bet.
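That comparison can be made concrete with the usual RICE formula, Reach × Impact × Confidence ÷ Effort. The Python sketch below assumes the scales Intercom described when it introduced RICE (Impact from 0.25 to 3, Confidence as a fraction, Effort in person-months) and measures Reach per quarter; every number is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RiceItem:
    name: str
    reach: float       # users affected per quarter
    impact: float      # 3 = massive, 2 = high, 1 = medium, 0.5 = low, 0.25 = minimal
    confidence: float  # 0.0 to 1.0, e.g. 0.8 for 80%
    effort: float      # person-months across the whole team

    @property
    def score(self) -> float:
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


items = [
    RiceItem("Small UI tweak", reach=8000, impact=0.5, confidence=0.8, effort=0.5),
    RiceItem("Major feature overhaul", reach=1500, impact=3, confidence=0.5, effort=6),
]
for item in sorted(items, key=lambda i: i.score, reverse=True):
    print(f"{item.score:8.1f}  {item.name}")
```

With these made-up inputs the tweak scores 6400 and the overhaul 375. The low score doesn't rule the overhaul out; it signals that it belongs in a longer-term plan rather than the next sprint.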

The real magic of these frameworks isn't the final score. It's the conversation they spark. When your team has to debate the "Impact" or "Effort" scores, it forces everyone to align on what truly matters to users and the business.

Comparing Prioritization Frameworks

Choosing between ICE and RICE often comes down to your team's maturity and the type of projects you handle. Here’s a quick breakdown to help you decide.

| Framework | Factors Considered | Best For |
|---|---|---|
| ICE | Impact, Confidence, Ease | Teams new to prioritization, projects with less quantifiable user data. |
| RICE | Reach, Impact, Confidence, Effort | Data-driven teams, projects where user numbers are a key decision factor. |

Ultimately, both frameworks push you toward more objective decision-making. Don't be afraid to start with ICE and graduate to RICE as you gather more user data.

From Feedback to Actionable Tasks

I've seen it happen a thousand times: great feedback gets logged in a spreadsheet or a random Slack channel, only to be forgotten forever. To make sure this doesn't happen, you need a workflow that directly connects your feedback tool to where the work actually gets done.

With a tool like Beep, for example, every piece of visual feedback can be turned into a task with a single click. Thanks to integrations with platforms like Jira, Notion, or Asana, that feedback—complete with the original screenshot and all the technical context—pops right into your team's existing queue. It’s a simple way to close the loop and ensure a brilliant idea never slips through the cracks.

If you want to dive deeper, we have a whole guide on how to prioritize product features with proven steps.

The key is finding a rhythm that balances quick wins with bigger, more strategic projects. Exploring different strategies for improving work efficiency will help your team focus on what's most impactful, ensuring all your improvement efforts deliver real, measurable results.

Putting Changes into Action and Seeing What Sticks

This is it. All the planning, feedback sessions, and tough prioritization calls lead to this moment. Shipping a change is the exciting part, no doubt. But it's only half the story.

The most important piece of the entire continuous improvement puzzle is figuring out if your change actually worked. If you skip this, you’re just throwing features into the dark and hoping for the best.

Good implementation isn't about one big, dramatic launch where everyone holds their breath. It’s a deliberate, step-by-step process. To do it right, modern teams rely heavily on solid continuous integration and delivery practices. You can explore top CI/CD pipeline best practices to see how this agile approach takes a ton of the risk out of the equation and helps you learn way faster.

The whole idea is to test your assumptions on a small scale before you bet the farm on a full rollout. That's how you make sure you're building something people genuinely want.

Validate Your Ideas with A/B Testing

One of the best tools you have for measuring impact is A/B testing. Instead of pushing a new design live for everyone, you show the new version (Variant B) to a slice of your users. The rest keep seeing the original (Control A).

This lets you directly compare how people behave. Does the new version get you closer to your goal?
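Showing Variant B to only a slice of users requires a consistent way to decide who sees what. A common approach is deterministic bucketing: hash a stable user ID so the same person always lands in the same group. The Python sketch below assumes such an ID exists; the experiment name and the 10% share are hypothetical, and most experimentation and feature-flag platforms handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Return "B" (variant) for roughly `variant_share` of users, "A" (control) otherwise.

    Hashing the experiment name together with the user ID keeps each user in
    the same group on every visit, while giving each experiment its own split.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # The first 8 hex digits form a 32-bit integer; scale it into [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "B" if bucket < variant_share else "A"


# Hypothetical: expose the new checkout button to about 10% of users.
groups = [assign_variant(f"user-{i}", "checkout-button-v2", variant_share=0.1) for i in range(10_000)]
print(f"Share in variant B: {groups.count('B') / len(groups):.1%}")
```

Because assignment is computed rather than stored, a returning user never flips between versions, which would otherwise muddy the comparison.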

Let’s say your objective was to "reduce cart abandonment." You could test a new checkout button design. After the test runs its course, you'll be able to say, at a stated level of statistical confidence, whether the new button led to more completed checkouts. This kind of data-driven proof takes the guesswork (and ego) out of your decisions.
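Experimentation tools usually run the statistics for you, but it helps to know what "statistical confidence" means here. One standard method for comparing two conversion rates is the pooled two-proportion z-test, sketched below in Python with only the standard library. It assumes the goal is measured as checkout completion rate (completed checkouts divided by sessions that reached checkout); the counts are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test comparing the conversion rates of A and B.

    Returns (z, p_value) with a two-sided p-value. Relies on the normal
    approximation, so it assumes reasonably large samples in both groups.
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)


# Hypothetical results: 20% completion for Control A vs. 22% for Variant B.
z, p_value = two_proportion_z_test(conv_a=1000, n_a=5000, conv_b=1100, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Under the conventional 0.05 threshold, a p-value below it counts as evidence that B's completion rate really differs from A's. Fix the sample size before the test starts: stopping as soon as the p-value dips below the threshold inflates false positives.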

Track Metrics That Actually Matter

You can't know if you succeeded if you don't track the right numbers. A classic mistake is getting obsessed with "vanity metrics" like page views, which sound nice but don't mean much for the business. You have to be disciplined here.

Here’s what that looks like in the real world:

Goal: Cut down on support tickets about a confusing UI element.

Metric to Track: The number of support tickets tagged with that specific issue. Did it drop by the percentage you were aiming for after the launch?

Goal: Get more people to use a new feature.

Metric to Track: Daily active users (DAU) of that specific feature or the average time they spend using it.
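For goals like these, checking success usually comes down to comparing a before and after window against the percentage you committed to. A minimal Python sketch with hypothetical ticket and usage counts; it assumes both windows are the same length and otherwise comparable, which is worth confirming before trusting the result.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative means a decrease)."""
    if before == 0:
        raise ValueError("the baseline must be non-zero")
    return (after - before) / before * 100


def hit_target(before: float, after: float, target_pct: float) -> bool:
    """Did the metric move at least as far as the target?

    Use a negative target for reductions (e.g. -15 for "cut tickets by 15%")
    and a positive one for increases (e.g. 20 for "grow usage by 20%").
    """
    change = percent_change(before, after)
    return change <= target_pct if target_pct < 0 else change >= target_pct


# Hypothetical: tagged support tickets in the 30 days before and after launch.
print(hit_target(before=120, after=96, target_pct=-15))
# Hypothetical: average daily active users of the feature, first vs. third month.
print(hit_target(before=2000, after=2500, target_pct=20))
```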

Measuring impact is about answering one simple question: "Did we achieve the outcome we set out to achieve?" If you can't answer that with data, the loop isn't closed.

The payoff for this kind of discipline is huge. Take the University of Virginia, for example. They completed 275 improvement initiatives using a structured process, which led to a whopping $82.1 million in cumulative savings. And among companies that truly commit, 75% see long-term benefits.

Don’t Forget to Close the Loop

Finally, there’s one last step that’s so easy to forget but so incredibly powerful: talking to your users again.

If you implement a change because someone gave you feedback, let them know! A quick, "Hey, we squashed that bug you reported," or "Good news! We just launched that feature you asked for," builds an incredible amount of loyalty.

It’s a small gesture, but it shows people you're actually listening and that their input matters. This makes them way more likely to give you great feedback again. It turns them from passive users into active partners in making your product better, which kicks off the whole cycle again.

Building a Lasting Culture of Improvement

Look, running a few improvement projects is one thing. But the real game-changer? Getting those continuous improvement process steps baked into your team's DNA. The goal is to stop treating improvement like a special event and start making it an automatic, everyday habit. Trust me, this kind of cultural shift doesn't happen by accident. It takes deliberate, consistent effort.

It all starts at the top. Leadership has to build an environment of psychological safety where every single person on the team feels they can flag an issue or pitch an idea without getting shot down. When people see their feedback is actually welcomed—and acted on—they start proactively looking for ways to make things better.

Weave Improvement into Your Daily Grind

If you want improvement to become a habit, you have to build it into your existing routines. Don't add a bunch of new meetings to everyone's calendar. Instead, just make your current ones better.

Sprint Retrospectives: Carve out time in every retro to talk about the process, not just the product. Ask questions like, "Where did we get stuck this sprint?" or "How could our feedback loop be faster and tighter?"

Planning Sessions: As you're planning new work, schedule time for small improvement experiments. This makes iteration feel like a core part of the development cycle, not some extra thing you do when you have time.

This approach makes continuous improvement a real, tangible part of the job, not just a nice idea you talk about once a quarter.

The true sign of an improvement culture isn't perfect execution. It's a relentless curiosity—a team that's always asking, "Is there a better way to do this?" and feels safe enough to find out.

Celebrate the Wins, Learn from the Bumps

How you react to outcomes is everything. You have to publicly celebrate the wins—no matter how small—to show that the effort is worth it. And when an experiment doesn't pan out? Treat it as a learning opportunity, not a failure. Dig into why it didn't work without pointing fingers. This is how you encourage people to take smart risks and be honest when things go sideways.

This mindset is becoming the norm. A recent comprehensive survey found that 69.89% of organizations are already practicing continuous improvement. What's more, over 90% of them report better onboarding and training, which just goes to show that these structured steps build stronger, more capable teams. You can read the full research about these organizational benefits to see the bigger picture.

Ultimately, a culture that lasts is built on trust and a sense of shared ownership. It’s that moment when your whole team truly believes that constant, tiny steps forward are what lead to massive success in the long run.

Common Continuous Improvement Questions

Diving into a continuous improvement process can bring up a few questions, especially when you're just getting your feet wet. I hear this one a lot: "Is all this formal process stuff too heavy for a small, agile team like ours?"

Honestly, you don't need a huge organization to see the benefits. These principles scale down beautifully. A lean startup can run the same Define-Gather-Prioritize-Measure loop just as well as a massive corporation—and usually, you can do it a whole lot faster.

Another big question that always comes up is about who owns what. Things can get messy if responsibilities aren't clear. While roles can definitely be flexible, a typical breakdown helps keep things on track.

Who's Responsible for Each Phase?

While everyone on the team chips in, certain people usually step up to drive each part of the process forward. This prevents things from getting stuck in limbo.

Defining Goals: This is usually on the Product Manager or team lead. They’re the ones making sure every objective ties back to the bigger business goals.

Gathering Feedback: While the whole team should have their ear to the ground, UX/UI Designers and Researchers often take the lead here, digging into both the numbers and the user stories.

Prioritizing Work: The Product Manager typically steps in again to run prioritization meetings. They'll use different frameworks to help the team agree on what’s most important.

Implementing & Measuring: Developers and Engineers are on the hook for the build, while the Product Manager and any Analysts keep a close eye on the metrics to see if the changes actually worked.

The specific titles don't matter as much as having clear ownership. Someone has to be accountable for moving each step forward, otherwise, the whole process just grinds to a halt.

Finally, people often ask how to get started without getting tangled up in complex frameworks. My advice? Keep it simple. Start small. Pick one nagging problem, run it through the cycle, and see what happens. That first small victory is all you need to build momentum.

Ready to make your feedback process faster and clearer? Beep lets your team drop visual, actionable feedback directly on your site, turning suggestions into tasks in seconds. Get started for free at justbeepit.com.

