Web Usability Testing: Boost UX & Conversions

- Farid Shukurov | CEO @ Beep

- Nov 7, 2025

- 16 min read

Ever get that feeling you've built something amazing, only to watch people stumble through it? That’s where web usability testing comes in. It’s the simple, powerful act of watching real people use your website to see where they get stuck, confused, or just plain frustrated.

This isn’t about focus groups or asking for opinions. It's about observing actual behavior to find those sneaky little problems that data analytics alone will never show you.

Why Web Usability Testing is a Strategic Imperative

Imagine a chef who never tastes their own food. They can follow the recipe perfectly, use the best ingredients, but without a quick taste, they have no idea if it’s actually any good. Launching a website without usability testing is pretty much the same thing. Your team might build something that’s technically perfect, but you're just guessing if it truly works for your users.

The whole point is to put your site in front of real people and watch them try to get things done. We’re not asking if they like the color of a button; we’re watching to see if they can even find it in the first place.

Uncovering What Analytics Can't See

Analytics dashboards are incredible. They'll tell you what happened—like that 70% of users ditched their shopping cart on the payment page. But they can’t tell you why.

Was the "Apply Coupon" field a total mess? Did the credit card form keep spitting out a confusing error? Was the "Next" button hidden below the fold on their phone? That's the stuff analytics misses.

Web usability testing fills in those gaps. By watching someone’s screen, listening to them think out loud, and seeing where they hesitate, you get to the root of the problem. These "aha!" moments are pure gold for making things better.

"Usability testing is the practice of getting a representative user to use your product and observing them, to see where they get stuck and what they find easy. It’s the most fundamental and valuable user research method."

The Massive Return on Investment from Testing

Let’s be honest, fixing usability issues isn't just about making people happy—it’s about making money. A confusing checkout process kills sales. An impossible-to-find contact form means you're losing leads. A navigation menu that makes no sense sends frustrated visitors straight to your competitors.

Investing in usability testing is one of the smartest, most cost-effective moves you can make. The research is pretty staggering: for every $1 you put into UX and usability, you can get up to $100 back. That's a 9,900% ROI. It’s not an expense; it’s an investment. You can find more insights on the financial impact of usability testing and see how it can directly benefit your bottom line.
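The arithmetic behind that percentage is easy to check yourself. Here's a minimal sketch, assuming the usual definition of ROI as net gain divided by the amount invested. The function name is made up for illustration and doesn't come from any library.

```python
def roi_percent(amount_returned: float, amount_invested: float) -> float:
    """Return ROI as a percentage: net gain divided by the amount invested."""
    if amount_invested <= 0:
        raise ValueError("amount_invested must be positive")
    net_gain = amount_returned - amount_invested
    return net_gain / amount_invested * 100


# The "$1 in, up to $100 back" case quoted above.
print(f"{roi_percent(100, 1):,.0f}% ROI")
```

Keep in mind that the $100 figure is an upper bound ("up to"), so plug in your own numbers before promising anything to your finance team.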

By finding and fixing these friction points, usability testing delivers a serious punch:

Higher Conversion Rates: When the path to purchase is smooth and intuitive, more people will actually complete it. Simple as that.

Better Customer Loyalty: A website that’s easy and enjoyable to use keeps customers coming back. You're not just a transaction; you're building a relationship.

Less Wasted Development Time: It is far cheaper to fix a design flaw during the prototype phase than it is after everything has been coded and launched.

At the end of the day, usability testing is your insurance policy against building something nobody can actually use. It ensures the website you create works for the most important people of all: your customers.

Choosing the Right Usability Testing Method

Picking the right web usability testing method is a bit like choosing the right tool for a job. You wouldn't use a sledgehammer to hang a picture frame, right? In the same way, your testing approach needs to match your specific goals, budget, and timeline. The choice you make will completely shape the kind of insights you get back.

Your first big decision is whether to go with a moderated or unmoderated test. Think of it as the difference between a guided museum tour and exploring the exhibits on your own. Each one gives you a totally different experience and has its own set of perks.

Moderated vs Unmoderated Testing

A moderated usability test is that guided tour. A trained facilitator is right there—either in person or remotely—guiding the participant, asking follow-up questions, and digging deeper into what they're thinking. This method is fantastic for figuring out the "why" behind what users do. When a user pauses, the moderator can jump in and ask, "What's going through your mind right now?" This gives you rich, qualitative feedback that’s almost impossible to get any other way.

On the other hand, unmoderated usability testing is like exploring the museum alone with a map. Participants complete tasks on their own time, usually from their own homes, while their screen and voice are recorded. This approach is way faster, easier to scale, and a whole lot cheaper. You can gather data from dozens or even hundreds of users in a fraction of the time, making it perfect for validating specific user flows or grabbing quantitative data.

The core trade-off here is really depth versus scale. Moderated testing delivers deep, nuanced insights from a small group, while unmoderated testing provides broader, numbers-driven data from a much larger one.

Remote vs In-Person Sessions

Another key choice is whether to run your test remotely or in person. This decision usually boils down to logistics, your budget, and whether you need to see those subtle non-verbal cues.

Remote Testing: This lets you test with users from literally anywhere in the world, which massively expands your participant pool and slashes costs. It's the go-to method for modern web usability testing simply because it's so convenient and quick.

In-Person Testing: This brings the user into a controlled lab environment. It’s a great way to observe their body language, facial expressions, and other small reactions you might miss over a video call. It's more expensive and takes more time, but for testing complex products, it can be invaluable.

Choosing the right method is a fundamental piece of a strong research process. For anyone looking to build a solid framework from the ground up, our guide can help you master the UX research process for better design. And once you've got the basics down, exploring a wider range of essential UX design methods will give you a more complete toolkit for making real improvements.

Comparison of Web Usability Testing Methods

To help you figure out what's best for your project, I've put together a quick comparison table. This breaks down the different methods to help you choose the right one based on your goals.

| Method | Best For | Key Advantage | Key Disadvantage |
|---|---|---|---|
| Moderated In-Person | Deep qualitative insights and complex task analysis. | Ability to observe non-verbal cues and probe deeply. | Expensive, time-consuming, and geographically limited. |
| Moderated Remote | Exploring user motivations and complex user flows with a wide audience. | Balances deep insights with geographic flexibility. | Can be prone to technical issues; misses some non-verbal cues. |
| Unmoderated Remote | Quick validation, benchmarking, and gathering quantitative data at scale. | Fast, affordable, and easy to scale with large sample sizes. | Lacks the ability to ask follow-up questions or clarify user behavior. |

At the end of the day, there’s no single "best" method. The ideal choice depends entirely on what you’re trying to find out. If you need to understand why users are ditching their shopping carts, a moderated session is perfect. But if you just want to know how many users can add an item to their cart in under 30 seconds, an unmoderated test will get you the numbers you need, fast.
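To make that last example concrete, here's a rough sketch of how you might turn unmoderated results into a "completed in under 30 seconds" number. The `TaskResult` fields and the sample data are assumptions for illustration; your testing platform's export will look different.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    participant: str
    completed: bool
    seconds: float  # time the participant spent on the task


def success_rate_within(results: list[TaskResult], time_limit: float) -> float:
    """Share of all participants who completed the task in under time_limit seconds."""
    if not results:
        raise ValueError("no results to analyze")
    on_time = sum(1 for r in results if r.completed and r.seconds < time_limit)
    return on_time / len(results)


# Made-up sample data, for illustration only.
results = [
    TaskResult("p1", True, 18.2),
    TaskResult("p2", True, 41.0),   # finished, but too slowly
    TaskResult("p3", False, 60.0),  # gave up
    TaskResult("p4", True, 27.5),
]
rate = success_rate_within(results, time_limit=30)
print(f"{rate:.0%} added an item to the cart in under 30 seconds")
```

Note that the rate is measured against everyone who attempted the task, not just the people who finished it, so abandoned attempts still count against you.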

Alright, you've got the theory down. Now it's time to roll up our sleeves and put it into practice. Running a successful usability test isn't just about throwing a prototype at someone and hoping for gold. It's a deliberate process, and this blueprint will walk you through it so you get clear, unbiased, and genuinely actionable results.

Think of it this way: the entire process rests on a solid foundation. If you don't know what you're trying to achieve, you'll just collect a pile of random feedback that leads nowhere. And if you test with the wrong people? You'll end up solving problems for an audience that isn't even yours.

Setting Clear Objectives

Before you write a single task or recruit a single participant, you have to know exactly what you’re trying to learn. Vague goals just lead to vague, wishy-washy results. A great test always starts with a sharp, focused objective that guides every single decision you make from here on out.

So, instead of a broad goal like, "See if users like our new homepage," you need to get specific and measurable. A much stronger objective would be something like, "Determine if users can find and understand our new pricing plans within 60 seconds."

Good objectives usually come from a real question or a known business problem:

Business Problem: Our shopping cart abandonment rate is 30% higher on mobile devices.

Test Objective: Pinpoint the friction spots in the mobile checkout flow that are causing users to drop off.

This kind of focus makes sure your web usability testing is aimed at solving real issues, not just collecting opinions.

Recruiting the Right Participants

The insights you get from your test are only as good as the people you recruit. Sure, testing with your internal team or your friends is convenient, but their built-in knowledge of your product will completely skew the results. You need real people who represent your actual target audience.

Take a moment to think about the key traits of your ideal user. What are their demographics? How tech-savvy are they? What motivates them? Use this to build a screener survey with specific questions that filter out anyone who isn't a good fit.
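If your screener answers land in a spreadsheet or form export, the filtering step can be as simple as the sketch below. The three criteria here (regular online shopper, no web or UX job, mobile as primary device) are hypothetical examples, not a recommended screener. Swap in whatever defines your own audience.

```python
from dataclasses import dataclass


@dataclass
class ScreenerResponse:
    name: str
    shops_online_monthly: bool  # hypothetical question: are they in the target audience?
    works_in_web_or_ux: bool    # hypothetical disqualifier: too much insider knowledge
    primary_device: str         # "mobile" or "desktop"


def is_good_fit(response: ScreenerResponse, target_device: str = "mobile") -> bool:
    """Keep only respondents who match every screener criterion."""
    return (
        response.shops_online_monthly
        and not response.works_in_web_or_ux
        and response.primary_device == target_device
    )


# Made-up responses, for illustration only.
responses = [
    ScreenerResponse("Ana", True, False, "mobile"),
    ScreenerResponse("Ben", True, True, "mobile"),      # works in UX, screened out
    ScreenerResponse("Chloe", False, False, "mobile"),  # not a regular online shopper
    ScreenerResponse("Dev", True, False, "desktop"),    # wrong device for this study
    ScreenerResponse("Eli", True, False, "mobile"),
]
recruits = [r.name for r in responses if is_good_fit(r)]
print(recruits)
```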

One of the biggest myths is that you need a huge group of participants. The truth is, you can uncover an incredible amount with just a small, well-chosen group.

User testing is almost spookily effective at finding usability problems, even with tiny sample sizes. Usability expert Jakob Nielsen of Nielsen Norman Group famously found that testing with just five users uncovers about 85% of a design's usability problems. This single insight changed the game, making research way more accessible and affordable for everyone.
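That figure comes from a simple model published by Nielsen and Tom Landauer: the share of problems found with n users is 1 - (1 - L)^n, where L is the share of problems a single user uncovers. They reported L averaging about 31% across the projects they studied. The sketch below just evaluates that formula. The function name is mine, and your own L may well be higher or lower.

```python
def share_of_problems_found(users: int, discovery_rate: float = 0.31) -> float:
    """Nielsen-Landauer model: 1 - (1 - L)^n, with L as the per-user discovery rate."""
    if users < 0:
        raise ValueError("users must be zero or more")
    if not 0 <= discovery_rate <= 1:
        raise ValueError("discovery_rate must be between 0 and 1")
    return 1 - (1 - discovery_rate) ** users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users: {share_of_problems_found(n):.0%} of problems found")
```

With the default rate, five users land right around the 85% mark, and each extra participant after that adds less and less. That's why several small rounds of testing usually beat one big one.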

Writing Effective Task Scenarios

Task scenarios are the absolute heart of your usability test. They give participants context and guide them through the key flows you want to check out. The trick is to create realistic situations that encourage natural behavior without leading them straight to the answer.

A poorly written task just gives the solution away. For example, telling a user, "Click on the 'Products' menu, find the 'Blue Widget,' and add it to your cart" isn't a task—it's an instruction manual.

Here’s a much better way to frame it: "Imagine you need a new tool for your workshop. Use the website to find a blue widget and begin the purchase process."

See the difference? This scenario-based task encourages them to explore and reveals their actual thought process. It shows you whether your navigation makes sense and if your product categories click with a real person.

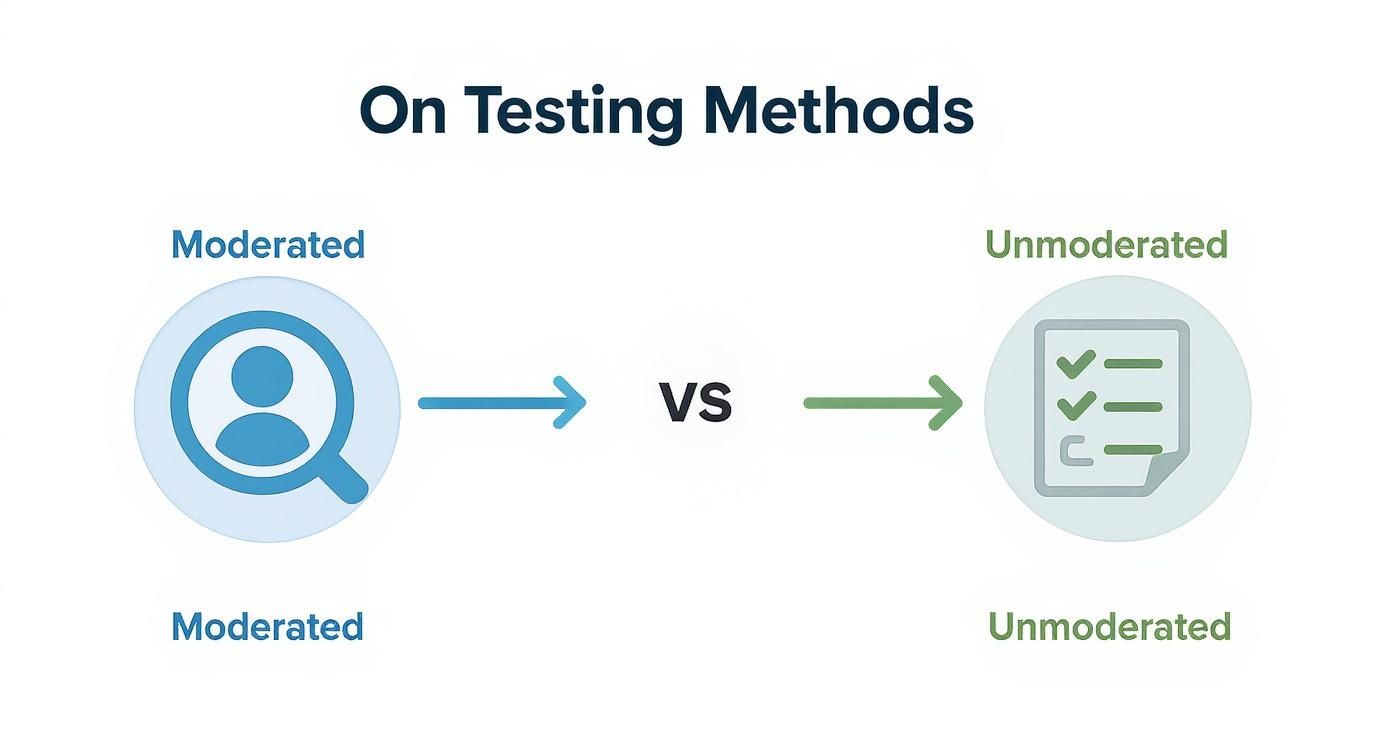

The infographic below breaks down the decision between moderated and unmoderated tests, which is a key choice you'll make during planning.

This visual shows how moderated testing is great for getting deep, qualitative feedback, while unmoderated testing is perfect for collecting quantitative data at a larger scale.

Moderating the Session with Confidence

If you're running a moderated test, your role as the facilitator is everything. Your job is to make the participant feel comfortable, gently guide them through the tasks, and probe for deeper insights—all without influencing what they do.

Here’s a basic script structure you can adapt:

Welcome and Intro (5 minutes): Explain what the session is for, reassure them that there are no wrong answers, and get their consent to record. Always emphasize that you're testing the website, not them.

Background Questions (5 minutes): Ask a few easy questions about their habits related to your product. This is great for building rapport.

The Tasks (30-40 minutes): Introduce each task scenario one at a time. Encourage them to "think aloud" as much as they can.

Post-Test Wrap-up (5-10 minutes): Ask for their overall impressions and thank them sincerely for their time.
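When you're booking participants, it helps to know how long that script actually runs. A quick sketch that adds up the ranges from the outline above (the variable names are just for illustration):

```python
# (segment, minimum minutes, maximum minutes), taken from the script outline above
agenda = [
    ("Welcome and intro", 5, 5),
    ("Background questions", 5, 5),
    ("The tasks", 30, 40),
    ("Post-test wrap-up", 5, 10),
]

shortest = sum(low for _, low, _ in agenda)
longest = sum(high for _, _, high in agenda)
print(f"Plan for {shortest} to {longest} minutes per session")
```

In other words, book hour-long slots and leave yourself a buffer between sessions.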

During the session, learn to love the silence. When a user pauses, resist every urge to jump in and help them. Just give them space to work through the problem on their own—that’s where the magic happens and the most valuable insights are hiding. For a resource that covers everything from planning to analysis, check out this complete guide on how to conduct usability testing.

Essential Tools for Modern Usability Testing

Let's be real—the right tech can take your web usability testing from a total headache to a smooth, insightful process. Picking the perfect tool isn't about finding the one with a million features. It's about finding the one that actually fits your goals, your budget, and the kind of testing you want to do.

Luckily, we're in a good spot. The market for these tools is booming—it was valued at around $1.51 billion in 2024 and is expected to shoot up to over $10.41 billion by 2034. You can discover more insights about the usability tools market to see just how fast things are moving.

This explosion in options means you've got a serious arsenal at your disposal to figure out what users are really thinking.

All-in-One Testing Platforms

If you're looking for a do-it-all solution, all-in-one platforms are the way to go. I'm talking about tools that handle almost everything, from finding participants to crunching the data. They make the whole process incredibly efficient.

Platforms like UserTesting and Maze are the heavy hitters here. They let you run both moderated and unmoderated studies, so you can do deep-dive interviews one day and gather broad quantitative data the next. The biggest win? Everything is in one place.

Plus, they often come with a huge panel of pre-screened testers, which saves a ton of time and hassle when it comes to recruitment.

Session Recording and Heatmap Tools

Sometimes, the best insights come when you're just a fly on the wall. Session recording tools like Hotjar let you watch anonymized recordings of real user sessions. You see exactly where people click, scroll, and get stuck, all without a formal test setup.

These tools are gold for spotting weird user behaviors and friction points you never would've thought to test for. Heatmaps then pull all that click-and-scroll data together visually, showing you in seconds which parts of your page are hot and which are not.

A key benefit of session recording is its ability to capture authentic, unprompted user behavior. It’s like being a fly on the wall, seeing how people interact with your site when they think no one is watching.

This approach is a great sidekick to formal usability testing. It gives you a constant stream of real-world data to form hypotheses that you can dig into later with more structured tests.

Visual Feedback and Collaboration Tools

Okay, let's talk about one of the biggest pains in usability testing: turning vague feedback into actual tasks for your team. This is where visual feedback tools totally shine. They close the gap between what a user says and what a developer needs to do.

Tools like Beep let anyone—stakeholders, testers, you name it—click on a live website and drop a comment right there. Every piece of feedback automatically grabs a screenshot and all the technical details like browser info, which cuts out all that frustrating back-and-forth.

This visual-first approach turns a comment like "the button doesn't work" into a task that has all the context baked right in. It makes it super easy to see exactly what needs fixing.

By keeping all the feedback right on the visual interface, teams can quickly build a prioritized to-do list. If you want to explore more options, check out our guide on the 10 best website feedback tools to enhance user experience. At the end of the day, having the right set of tools just makes your whole testing process sharper and way more effective.

Analyzing Results and Reporting Your Findings

Running the tests is a huge milestone, but let's be real—the real work kicks in after that last participant logs off. You’re now sitting on a mountain of screen recordings, scribbled notes, and user quotes. That's all just raw data. The magic happens when you turn that pile of observations into a story so clear and compelling it forces people to make changes.

This is the detective phase. You're moving beyond one-off incidents, like a single user fumbling with a button, to find the patterns that trip up lots of people.

Your ultimate goal isn't just to write a report. It's to build an argument for improvement so strong that stakeholders have no choice but to listen.

From Raw Data to Actionable Insights

First things first, you need to get organized. It's incredibly easy to get swamped by all the feedback, so you need a game plan. The secret is blending your qualitative and quantitative data to see the whole story.

Qualitative Data: This is the "why" behind what users did. Think direct quotes ("I swear I looked everywhere for the search bar!"), sighs of frustration you heard over the mic, and their running commentary. This is where the human side of the usability problems really shines through.

Quantitative Data: This is the hard evidence—the "what" and "how many." Metrics like task completion rates, time on task, and the number of errors give you concrete proof of where the biggest fires are.
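Those three quantitative metrics are simple to calculate once your session notes are in a consistent shape. Here's a minimal sketch using only Python's standard library. The session records are invented for illustration, and using the median for time on task (so one very slow session doesn't skew the number) is my choice rather than a rule.

```python
from statistics import mean, median

# One made-up record per participant for a single task.
sessions = [
    {"participant": "p1", "completed": True, "seconds": 48, "errors": 0},
    {"participant": "p2", "completed": True, "seconds": 95, "errors": 2},
    {"participant": "p3", "completed": False, "seconds": 180, "errors": 4},
    {"participant": "p4", "completed": True, "seconds": 62, "errors": 1},
    {"participant": "p5", "completed": True, "seconds": 71, "errors": 1},
]

# Task completion rate: successful attempts divided by all attempts.
completion_rate = sum(s["completed"] for s in sessions) / len(sessions)

# Time on task: only successful attempts, so abandoned sessions don't distort it.
median_time = median([s["seconds"] for s in sessions if s["completed"]])

# Errors: average number of errors per participant, successful or not.
avg_errors = mean([s["errors"] for s in sessions])

print(f"Completion rate: {completion_rate:.0%}")
print(f"Median time on task: {median_time} seconds")
print(f"Average errors per participant: {avg_errors:.1f}")
```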

Start combing through everything and look for things that keep popping up. Did three out of your five testers get stuck on the exact same checkout step? Did more than one person call the homepage "cluttered" or "confusing"? These recurring themes are your golden nuggets.

Your job is to find the signal in the noise. A single user's opinion is an anecdote, but a problem encountered by multiple users is a pattern demanding attention.

Once you spot these patterns, you can start framing them as specific usability issues. Don't just say, "Users had trouble." Get specific: "The navigation labels are ambiguous, causing users to guess where to click."
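If you tag each observation with a short issue label while reviewing recordings, separating patterns from anecdotes becomes a counting exercise. This is a rough sketch under that assumption. The labels and data are invented, and the "two or more participants" threshold is simply one reasonable reading of "multiple users."

```python
from collections import defaultdict

# (participant, issue label) pairs noted while reviewing sessions; made-up data.
observations = [
    ("p1", "coupon field unclear"),
    ("p2", "coupon field unclear"),
    ("p2", "homepage feels cluttered"),
    ("p3", "coupon field unclear"),
    ("p4", "homepage feels cluttered"),
    ("p5", "could not find search bar"),
    ("p1", "coupon field unclear"),  # same person hitting it twice still counts once
]

# Collect the distinct participants behind each issue.
participants_by_issue = defaultdict(set)
for participant, issue in observations:
    participants_by_issue[issue].add(participant)

# An issue seen by two or more participants is treated as a pattern.
patterns = {
    issue: len(people)
    for issue, people in participants_by_issue.items()
    if len(people) >= 2
}
print(patterns)
```

Counting distinct participants rather than raw mentions matters here: one frustrated user repeating the same complaint five times is still an anecdote.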

Prioritizing What to Fix First

Trust me, you'll probably end up with a laundry list of problems, and you can't fix them all at once. Prioritizing is absolutely essential if you want to make an impact without completely overwhelming your dev team. I've found a simple but powerful way to do this is to rank each issue by its severity and frequency.

For every problem you've found, ask yourself two simple questions:

How many users did this affect? (Frequency)

How badly did this stop them from doing what they wanted to do? (Severity)

A minor typo that only one person noticed? That's low-priority. A broken "Add to Cart" button that blocked every single participant? That's a code-red, all-hands-on-deck emergency. This little exercise helps you zero in on the fixes that will give you the biggest bang for your buck.
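Here's one way to turn those two questions into a ranked list. It's a sketch, not a standard formula: the 1-to-4 severity scale and the "severity times share of users affected" score are assumptions I've made for illustration, so adjust both to suit your team.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    users_affected: int
    severity: int  # assumed scale: 1 = cosmetic, 4 = blocks the task entirely


def priority_score(issue: Issue, total_users: int) -> float:
    """Severity weighted by the share of participants who hit the issue."""
    frequency = issue.users_affected / total_users
    return issue.severity * frequency


TOTAL_USERS = 5  # participants in this made-up round of testing
issues = [
    Issue("Typo in footer", users_affected=1, severity=1),
    Issue("Ambiguous navigation labels", users_affected=3, severity=3),
    Issue('"Add to Cart" button is broken', users_affected=5, severity=4),
]

ranked = sorted(issues, key=lambda i: priority_score(i, TOTAL_USERS), reverse=True)
for issue in ranked:
    print(f"{priority_score(issue, TOTAL_USERS):.1f}  {issue.description}")
```

The broken button floats to the top and the typo sinks to the bottom, which matches the gut call above. The score is most useful for the messy middle of the list, where the order isn't obvious.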

Crafting a Compelling Usability Report

Your final report is the bridge between your research and actual, tangible improvements. A dry, 50-page document filled with text is going to get skimmed and forgotten. To really get buy-in, your report needs to be visual, straight to the point, and focused on solutions.

A great report that gets things done usually includes:

Executive Summary: A one-pager with the absolute most critical findings and your top recommendations. Put this right at the beginning so busy execs can get the main takeaway in 60 seconds.

Methodology Snapshot: Briefly cover who you tested with and what you asked them to do. This is all about building trust in your results.

Key Findings: Present each major issue as its own point. This is where you bring in the evidence—screenshots, juicy user quotes, and if you can, short video clips. Seriously, nothing hits harder than a 15-second video of a real user struggling.

Actionable Recommendations: For every problem you highlight, offer a clear, specific suggestion for how to fix it. Don't say, "Improve the navigation." Instead, try, "Rename the 'Resources' menu to 'Case Studies' to better match what users were looking for."

Presenting your findings is an art. If you want a structured way to pull all this together, using a template can be a lifesaver. You can check out a great user testing feedback template to improve UX insights that helps organize your report. When you turn your observations into a clear story, your hard work actually leads to a better website for everyone.

Common Usability Testing Mistakes to Avoid

Even the most carefully planned web usability testing can get derailed by a few common, yet totally avoidable, mistakes. Trust me, I've seen it happen. Dodging these classic blunders is the key to getting data you can actually rely on. The quality of your insights hangs entirely on the integrity of your process.

One of the biggest face-palm moments is testing with the wrong people. It’s super tempting to just grab a colleague from down the hall or a friend for some "quick feedback," but here's the hard truth: they are not your users. They waltz in with insider knowledge and biases that a real customer would never have, giving you skewed results that are pretty much useless in the real world.

Asking Leading Questions

Another classic pitfall is asking leading questions. It's subtle, but the way you phrase something can completely poison the well, guiding a participant toward the answer you want to hear. The whole point is to see what people do naturally, not to have them validate your assumptions.

For example, asking, "Wasn't that checkout process easy?" basically corners them into agreeing with you. A much better, more neutral approach is, "How was your experience with the checkout process?" This open-ended question invites them to give you the good, the bad, and the ugly—which is exactly what you need.

A usability test is not a sales pitch. The moment you start trying to convince the participant that your design is good, you’ve stopped collecting data and started influencing it.

Confusing Opinions with Behavior

Finally, a mistake I see all the time is focusing on what users say instead of what they do. People are generally polite. They don’t want to hurt your feelings by telling you your baby is ugly. So they might tell you they love a feature, right after you watched them struggle and swear at the screen for five straight minutes trying to use it.

This is exactly why observation is the heart and soul of usability testing. Their actions—where they click, where they hesitate, where they get frustrated and backtrack—tell a story that’s a thousand times more honest than their words.

What they say: "Oh yeah, the navigation makes total sense."

What they do: Clicked the wrong menu item three times before finally stumbling upon what they needed.

Always, always prioritize observed behavior over subjective opinions. You’re not here to collect compliments; you're here to find and fix the friction. Steer clear of these common slip-ups, and your testing will deliver the kind of clear, actionable insights that lead to real-deal improvements.

Got Questions About Usability Testing?

Diving into your first usability test can bring up a few last-minute questions, even when you think you've got it all planned out. I've been there! Let's clear up some of the most common head-scratchers so you can get started with total confidence.

How Many Users Do I Really Need for a Test?

This is the big one, and the answer is probably fewer than you think. For most qualitative tests—where your goal is to find and fix usability problems—you'd be shocked by what you can learn from just 5 users. Seriously. That small group will typically uncover about 85% of the most common issues.

Now, if you're running a quantitative test to gather hard stats and prove a hypothesis, that's a different story. For that, you'll need a much bigger sample size, usually 20 users or more, to get data that’s statistically significant.

What’s This Going to Cost Me?

The cost of usability testing can swing wildly. It really all depends on the path you take.

You could run a simple, unmoderated remote test using an online platform for just a few hundred dollars. On the other end of the spectrum, a fully moderated, in-person study can run into the thousands once you factor in the moderator's time, paying your participants, and renting a space.

When Is the Best Time to Run a Usability Test?

Honestly? Yesterday. But the next best time is right now.

The most effective strategy is to test early and test often, weaving it into your entire design process. There's value at every single stage, whether you're validating rough ideas on a basic prototype or fine-tuning the little details on a high-fidelity mockup. Kicking things off early is always the smartest move—it's how you catch those costly mistakes before they ever make it into development.
